Can we put the AI in hAIku?

Tim McCallum

Tim McCallum

spin

haiku

ai

serverless

llm

If you are fond of the linguistic arts, the nexus of haiku poetry and Artificial Intelligence (AI) might intrigue you. There are more complexities to machines composing haikus than initially believed. This article delves into the intricacies of haiku creation and the challenges that computers face in syllable counting and haiku construction. We employ the Large Language Models (LLM) approach for these linguistic feats, and demonstrate how to create and deploy a Spin application to compose haiku poetry.

A Word or Two About the Haiku

Traditionally written haikus capture one or more moments in nature, describing sights, sounds, and the feelings of one’s surroundings. These classic haikus consist of three lines that total seventeen syllables:

- The first line contains five syllables,

- the second has seven syllables, and

- the last line, again, has five syllables.

Surrounded by driverless cars and “smart-everything,” it doesn’t seem like too much of a stretch to imagine that somewhere, an AI application is diligently producing haiku poetry with this five-seven-five (5-7-5) structure. And succeeding.

As it turns out, when it comes to writing haiku, AI has significant room for improvement.

Can Computers Count Syllables?

The challenge of writing haiku poetry primarily lies in syllable counting, which is a multi-step process for a computer, involving a dynamic set of algorithms and the necessity to retain a growing amount of unique algorithms and information.

One rather primitive solution to circumvent the syllable counting issue is to establish a correlation between individual words and their specific syllable counts. A machine could then refer to the stored word-syllable correlation, when juggling words, as part of its haiku composition process. However, there are a few flaws in this approach. Firstly, someone would have to be responsible for creating and maintaining this word-syllable mapping. Secondly, the mapping would have to encompass every word from each language and dialect. Thirdly, the mapping service would need to be available to haiku-writing robots night and day. And so on!

Even if the mapping is possible, further complexities exist. For instance, non-English words employed in the haiku might not conform to the conventional English syllable division rules. Homographs (words spelled identically but pronounced differently) can lead to varied syllable counts. In such instances, the resulting haiku might appear and sound somewhat unfamiliar to a human. Highlighting the fact that perhaps the humble backyard lyricists has an edge over AI in the realm of haiku composition.

The Haiku Conundrum

“Haiku conundrum,

It becomes more and more clear,

More work must be done.”

Indeed, more work must be done. Let’s move on and find out what type of work.

AI Training

AI inference has succeeded at solving many tasks already. However, it requires a lot of pre-training. And a lot of work has to be done before that training can even begin. A human working in AI will perform a substantial amount of manual tasks to generate AI training sets. Examples include looking at images, recognizing an object, and then saving that image to a specific training folder. Then writing information in a metadata file while linking from that metadata file to the specific image file in the training set, and so on … thousands of times!

The goal, that we set as human trainers, is that one day the AI system will entertain us spectacularly with its post-training intelligence. “But couldn’t we get machines to help create the training data?” you may ask. The answer to that is … no! That idea creates a chicken and egg problem. We need to train AI models with accurate training data which can then, later, lead to more accurate outcomes.

Minimal Training

The ultimate goal of AI training and development is to achieve the highest outcome with the least amount of effort. As it turns out, in some cases, AI can learn and act with minimal training data in some areas. There are cases where we simply can not provide enough training data. Examples include facial recognition and personal hand-written signature recognition, where the subject of the inference has not provided their photograph or signature at the scale the AI needs to properly train. In these, and other real-world challenges different approaches such as one-shot and few-shot learning can be employed.

GPT-4, like its predecessor GPT-3, is capable of few-shot learning. More specifically we instructed the model to generalize the new task of writing haiku poetry after seeing only a few examples of the task. Unfortunately, we found out that the result did not yield a high enough result to be deemed successful. Some additional details (of using few-shot learning; where the model generalizes from a handful of examples) are below.

Experiments

We gave the following instructions to ChatGPT4:

You are a haiku poetry-writing bot. Please read the following 10 haiku poems, and then I will ask you to write some haiku poetry for me based on specific subjects. When you write each haiku poem, the first line must contain five syllables, the second line must contain seven syllables, and the last line must contain five syllables.

Haiku poem number 1: Last Red in the sky, a small girl’s moon face rises, over the counter.

Haiku poem number 2: Christmas services, a cellular phone rings out, handel’s messiah.

Haiku poem number 3: Passover darkness, before the buds burst open, a child’s eyes in death.

Haiku poem number 4: Last night of Summer, the bright full moon of last night, hidden by a cloud.

Haiku poem number 5: Midnight and full moon, my neighbour asks to borrow, the vacum cleaner.

Haiku poem number 6: Yellow walnut leaves, slowly appear on the lawn, early morning light.

Haiku poem number 7: After its first flight, the young gerfalcon’s talons, tighter on my glove.

Haiku poem number 8: Sultry afternoon, only the mailbox shadow, crosses the dirt road.

Haiku poem number 9: Long journey back home , a forgotten bale of hay, slowly rots away.

Haiku poem number 10: Autumn mist obscures, the island in the distance, she cleans her glasses.

The initial response (regarding the few-shot learning instructions) showed that the task was understood:

Thank you for sharing those haikus with me.

They paint vivid pictures with their words.

Please provide me with the subjects you'd like the haikus to be based on.

For my experiment, the following instruction, to write a new haiku poem, was provided to ChatGPT-4 a total of 10 times:

Please write a haiku about ChatGPT and Grammarly and AI for me now.

Given the 10 chances to nail down the syllables, the system was unable to write the 5-7-5 format correctly every time. The best result achieved on that occasion was 4 out of 10; which was less than 50% accuracy. The main issue, it turns out was that the system was not able to properly count syllables. While ChatGPT itself can explain in great depth what phonemes are, the results of haiku writing showed that ChatGPT itself was incorrectly counting the syllables in the word “ChatGPT”. For example:

Silent ChatGPT speaks,

Grammarly refines the prose,

AI's dance of words.

As we can see, the above haiku follows a 7-7-5 syllable format. Instead of the 5-7-5, which we wanted.

If we move forward and mix it up a little, we can see that ChatGPT is pretty amazing. It can appear both funny and accurate most of the time:

Write me a haiku about putting IKEA furniture together.

Swedish puzzle parts,

Instructions weave a challenge,

Table stands, complete.

I personally think this is brilliant (and as a bonus conforms to the 5-7-5 that we were chasing), but if we dig a little deeper, and ask for the brand name to be included in the haiku, we see the syllabification issue start to reappear:

Write me a haiku using 5, 7, 5 syllable format and use the brand name IKEA in the poem.

IKEA blue and gold,

Flat boxes turn into dreams,

Home takes shape and form.

This is close, but unfortunately we get a 6-7-5 format. Which brings us back to our original problem of having the correct correlation between individual words and their specific syllable counts.

More Work Must Be Done

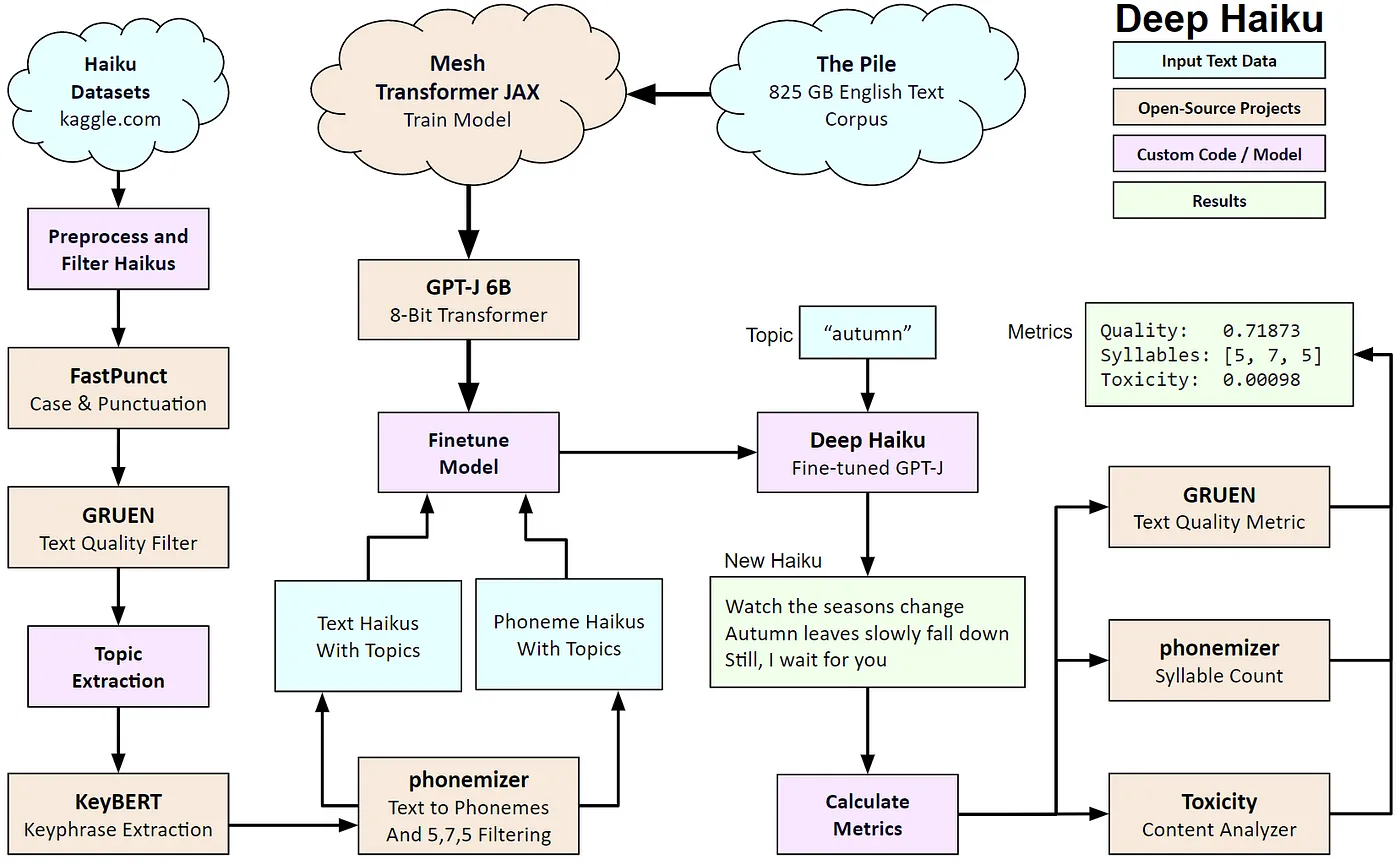

Robert A. Gonsalves has a project called Deep Haiku which provides (what I think is) the most thorough execution of fine-tuning a large transformer to generate rhythmic prose. Deep Haiku, uses a combination of software libraries to assist in the overall process of creating a quality training set. Below is a diagram of the components and processes that Rob uses to train and run Deep Haiku.

The creation of Deep Haiku starts with over 140 thousand Haikus which go through a series of refinement for filtering purposes:

- processing by FastPunct to add punctuation and casing,

- running through a keyword extraction model KeyBERT to extract phrases used as prompts,

- filtering using the GRUEN metric to gauge the quality of the text, and

- processing using the phonemizer library to count the syllables and also convert the prompts and Haikus into phonemes.

After the above steps are carried out, around 26 thousand relatively high-quality Haikus remain. The source code [1] for Rob’s Deep Haiku project is available on GitHub; released under the CC BY-SA license. This is an amazing and inspiring contribution. So I decided to write a Spin application to demonstrate this approach.

Creating a Haiku Writing Application

I started with Rob’s LLaMa2 13B Trained to Write Haikus Given a Topic and then quantized the model in readiness for the Spin framework where I would be creating the Haiku writing application.

NOTE: This tutorial uses Meta AI’s Llama 2, Llama Chat and Code Llama models you will need to visit Meta’s Llama webpage and agree to Meta’s License, Acceptable Use Policy, and to Meta’s privacy policy before fetching and using Llama models.

The application can be found in the ai-examples repository:

$ git clone https://github.com/fermyon/ai-examples.git

$ cd haiku-generator-rs

$ mkdir -p .spin/ai-models

$ cd .spin/ai-models

# Before running the following code, please note that the model is 26GB and will take quite a long time to download (and use a good chunk of your bandwidth and any data download limits you might have).

$ wget wget https://huggingface.co/tpmccallum/llama-2-13b-deep-haiku-GGML/resolve/main/llama-2-13b-deep-haiku.ggml.fp16.bin

# Rename the model to align with the application's configuration

$ mv llama-2-13b-deep-haiku.ggml.fp16.bin llama2-chat

After performing the above steps, we get the following structure in the application’s .spin folder:

$ tree .spin

.spin

└── ai-models

└── llama2-chat

To build the application we use the following commands:

$ cd ../../../haiku-generator-rs

$ spin build --up

Output

To test the application out, we simply make a request using curl and pass in a JSON object; in this case we provided the word “ChatGPT” as the topic.

$ curl --json '{"sentence": "ChatGPT"}' http://localhost:3000/api/haiku-writing

You can imagine my surprise when the following haiku popped out!

“My son and I are

Having an absolute blast

On ChatGPT.”

This is exactly the outcome we dreamed of, 5-7-5 format.

However, and this is a big however, in the name of science, and fairness, I decided to level the playing field by making requests in accordance with what I asked of ChatGPT in our original experiments above. For example:

$ curl --json '{"sentence": "Please write a haiku about ChatGPT and Grammarly and AI for me now."}' http://localhost:3000/api/haiku-writing

The results? … dear reader, reveal that you and I may still have the upper hand over AI when it comes to writing haiku poems!

Rob’s prior work is fantastic and has improved machine-written haiku in a big way. Rather than just being trained on “the internet” Rob has singled out haiku datasets and then pre-cleaned that data through a number of steps (FastPunct, KeyBERT, GRUEN and phonemizer) before even training the AI model. I find that Rob’s trained model is a step ahead of other models that don’t know how to follow the standard meter. In contrast, Deep Haiku is able to use a 5-7-5 meter for a very wide range of topics, almost all of the time. I would especially like to thank Rob for his time and expertise during this process.

The nit that I discovered (with all AI LLMs in general) relates to counting syllables of:

- initialisms (such as “ATM” for Automated Teller Machine, and “UFO” for Unidentified Flying Object),

- acronyms (such as “laser” for Light Amplification by Stimulated Emission of Radiation),

- brand names (such as IKEA), and lastly

- alphanumerics (such as “B2B” for business-to-business, or “H2O” for water).

I feel like the above make up a reasonable amount of our language and therefore there is an additional opportunity for research in this space.

It only seems fitting to wrap up this article with one final haiku: “Haiku Number One” from the legendary poet Dr John Cooper Clarke [2]:

“To freeze the moment

In seventeen syllables

Is very diffic”

He’s not wrong, you know?

References

[1]

@software{DeepHaiku,

author = {Gonsalves, Robert A.},

title = {Deep Haiku: Teaching GPT-J to Compose with Syllable Patterns},

url = {https://github.com/robgon-art/DeepHaiku},

year = 2022,

month = February

}

[2] Clarke, J. C. (2018). The Luckiest Guy Alive. United Kingdom: Pan Macmillan UK.