A Bridge over Troubled Water: Sending Azure Alerts to Slack with Spin

Kate Goldenring

wasm

webassembly

azure

spin

fermyon

Improving the visibility of infrastructure alerts is key to reducing time to response. This is why many operations teams integrate alerts with their team messaging platforms. At Fermyon, we are one of those teams. We pull alerts from all our cloud providers and services into Slack channels. Recently, I ran into a challenge while trying to bridge Azure service alerts into Slack. Azure and Slack expect different JSON formats for messages, which meant I couldn’t simply drop a Slack incoming webhook URL into the Azure Portal. I needed an application to bridge these JSON differences, so I created Azure Slack Bridge, a lightweight, HTTP-triggered transform function. This blog will walk through how to deploy the Azure Slack Bridge Spin application and equip you to build your own service bridges using Spin.

Using Spin as a Service Bridge

Rather than learning a new Azure product, such as Azure Logic Apps, I chose to build a simple Spin application to act as middleware between Azure and Slack. Azure formats alert payloads using its Alert Common Schema, while Slack expects JSON payloads with a single text field. I needed a function to bridge these two formats.

    Azure Monitor  →  Azure Slack Bridge (Spin App)  →  Slack
          │                         │                     │
      (Complex                  (Parses &              (Simple
        JSON)                  Transforms)            Message)
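To make the destination side concrete, here is a minimal, dependency-free sketch of building the body a Slack incoming webhook accepts: a JSON object with one `text` field. The helper names `escape_json` and `slack_payload` are hypothetical and only for illustration. The escaping is hand-rolled to keep the sketch standalone; a real component would more likely serialize a struct with serde_json.

```rust
/// Escape a string so it can sit inside a JSON string literal.
/// Hand-rolled only to keep this sketch dependency-free (an assumption
/// of this example, not how the bridge itself serializes messages).
fn escape_json(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \uXXXX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Build a Slack incoming-webhook body: a JSON object with a single `text` field.
fn slack_payload(text: &str) -> String {
    format!("{{\"text\":\"{}\"}}", escape_json(text))
}
```

Whatever the origin service sends, the bridge's job ends with producing a string of this shape.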

Another benefit of this approach is portability and extensibility. Because the bridge is just a Spin application, it isn’t tied to Azure-specific tooling. If you’re operating in a multi-cloud environment, you can easily repurpose or extend the same bridge application to handle alerts from Amazon Web Services, Google Cloud Platform, or other cloud services without having to learn the quirks of yet another managed integration product. This gives you a consistent, reusable way to transform alerts into a Slack-compatible message across cloud providers.

    Cloud Service  →  Slack Bridge (Spin App)  →  Slack
          │                      │                  │
       (JSON)                (Parses &           (Simple
                            Transforms)         Message)

Abstracting further, we arrive at a general model where Spin applications resolve JSON disparities.

    Origin Service  →  Bridge (Spin App)  →  Destination Service
           │                   │                      │
       (JSON A)            (Parses &              (JSON B)
                          Transforms)

So regardless of whether you use Slack or Azure, this blog should give you a toolkit for using Spin applications to bridge your services.
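The general model can be sketched as a small generic function: parse format A, transform it into format B, deliver it, and report a status to the origin service. Everything here (`BridgeStatus`, `bridge`, and the three hooks) is a hypothetical illustration rather than part of Spin or the Azure Slack Bridge repository.

```rust
/// Outcome of one bridge invocation. A real handler would map these
/// to HTTP status codes returned to the origin service.
#[derive(Debug, PartialEq)]
enum BridgeStatus {
    Delivered,      // e.g. 200
    BadInput,       // e.g. 400: origin payload could not be parsed
    DeliveryFailed, // e.g. 500: destination rejected the message
}

/// Generic bridge: parse format A, transform it to format B, deliver it.
/// `parse`, `transform`, and `deliver` are hooks a concrete bridge supplies.
fn bridge<A, B, E>(
    body: &[u8],
    parse: impl Fn(&[u8]) -> Result<A, E>,
    transform: impl Fn(&A) -> B,
    deliver: impl Fn(B) -> bool,
) -> BridgeStatus {
    let origin = match parse(body) {
        Ok(msg) => msg,
        Err(_) => return BridgeStatus::BadInput,
    };
    if deliver(transform(&origin)) {
        BridgeStatus::Delivered
    } else {
        BridgeStatus::DeliveryFailed
    }
}
```

Swapping the origin or destination then means swapping one hook, while the control flow stays the same.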

Azure Slack Service Bridge Implementation

The Azure Slack Bridge Spin application is an HTTP-triggered WebAssembly component written in Rust. Its HTTP handler transforms an Azure alert into a Slack message in just five steps:

    use spin_sdk::http::{IntoResponse, Method, Request, Response};
    use spin_sdk::{http_component, variables};

    // AzureAlert, SlackMessage, and format_alert_message are defined elsewhere in the application.

    #[http_component]
    async fn handle_slack_bridge(req: Request) -> anyhow::Result<impl IntoResponse> {
        // 1) Get the Slack webhook URL from a dynamic application variable
        let slack_webhook_url = variables::get("slack_webhook_url")?;

        // 2) Parse the origin service JSON message from the HTTP request body
        let azure_alert = match serde_json::from_slice::<AzureAlert>(req.body()) {
            Ok(alert) => alert,
            Err(e) => {
                eprintln!("Failed to parse Azure alert: {}", e);
                return Ok(Response::builder()
                    .status(400)
                    .body(format!("Invalid alert payload: {}", e))
                    .build());
            }
        };

        // 3) Format the origin message for Slack
        let slack_text = format_alert_message(&azure_alert);

        // 4) Send the message to Slack
        let slack_message = SlackMessage { text: slack_text };
        let request = Request::builder()
            .method(Method::Post)
            .body(serde_json::to_vec(&slack_message)?)
            .header("Content-type", "application/json")
            .uri(slack_webhook_url)
            .build();
        let response: Response = spin_sdk::http::send(request).await?;

        // 5) Return the result back to the origin service
        if *response.status() != 200 {
            return Ok(Response::new(500, "Failed to send to Slack"));
        }
        Ok(Response::new(200, ""))
    }
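The handler relies on a formatting step that is not shown above. As a rough idea of what such a step could look like, here is a sketch that turns a few fields from the `essentials` object of Azure's common alert schema into a one-line title plus optional description. The `Essentials` struct, the emoji mapping, and the function names are assumptions made for illustration; the repository's actual `AzureAlert` type and `format_alert_message` function may differ.

```rust
/// Hypothetical subset of the `data.essentials` object in Azure's
/// common alert schema (JSON field names shown in the comments).
struct Essentials {
    alert_rule: String,        // "alertRule"
    severity: String,          // "severity": "Sev0" (critical) through "Sev4" (verbose)
    monitor_condition: String, // "monitorCondition": "Fired" or "Resolved"
    description: String,       // "description"
}

/// Pick an emoji per severity. The mapping is illustrative only.
fn severity_emoji(severity: &str) -> &'static str {
    match severity {
        "Sev0" | "Sev1" => "🔴",
        "Sev2" => "🟠",
        "Sev3" => "🟡",
        _ => "🔵",
    }
}

/// Build the Slack text: a title line, then the description if present.
fn format_alert_sketch(e: &Essentials) -> String {
    let condition = if e.monitor_condition == "Resolved" { "✅" } else { "⚠️" };
    let mut text = format!("{} {} {}", condition, severity_emoji(&e.severity), e.alert_rule);
    if !e.description.is_empty() {
        text.push('\n');
        text.push_str(&e.description);
    }
    text
}
```

Keeping the formatting in a pure function like this makes it easy to unit test without sending anything to Slack.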

Using the Azure Slack Bridge Application to Monitor Cosmos DB

Let’s walk through a common alerting example from Azure Cosmos DB. Cosmos DB is Azure’s fully managed database and one of Spin’s supported Key Value Store providers. This means you can deploy SpinKube to Azure Kubernetes Service and enable applications to use key-value stores with a Cosmos DB backing. With this setup in place, you’d likely want to monitor your infrastructure to track performance and catch issues early.

One common Cosmos DB metric to monitor is a threshold of 429 HTTP status codes, which are emitted when requests are rate-limited. For example, you may want an alert when 100 or more requests are throttled. Let’s see how to bridge that alert to Slack with the Azure Slack Bridge Spin application.

Step 1: Create an Incoming Slack Webhook

Slack supports incoming webhooks to post messages from external services. After creating a webhook, you’ll receive a unique URL to send JSON payloads with simple text messages. Follow this guide to create a Slack app and webhook. Copy the final URL – you’ll set this as the slack_webhook_url variable in the Azure Slack Bridge Spin application.

Step 2: Build and Deploy the Azure Slack Bridge Application

Testing the Application Locally

After installing Spin and creating a Slack incoming webhook, you can test the application locally. First, build and run the application, setting your Slack webhook URL as a Spin variable using the environment variable provider:

    git clone https://github.com/kate-goldenring/azure-slack-bridge
    cd azure-slack-bridge
    SPIN_VARIABLE_SLACK_WEBHOOK_URL="https://hooks.slack.com/services/<...>" spin build --up
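The environment variable name in the command above follows the naming rule of Spin's environment variable provider: the application variable name, upper-cased, with a `SPIN_VARIABLE_` prefix. A tiny sketch of that mapping, where the helper name `spin_env_var` is hypothetical:

```rust
/// Name of the environment variable that Spin's environment variable
/// provider reads for a given application variable.
/// (`spin_env_var` is an illustrative helper, not part of the Spin SDK.)
fn spin_env_var(variable: &str) -> String {
    format!("SPIN_VARIABLE_{}", variable.to_ascii_uppercase())
}
```

So the `slack_webhook_url` variable read by the handler is supplied through `SPIN_VARIABLE_SLACK_WEBHOOK_URL`.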

Next, test your connection to Slack with the example Azure alert payload provided in the repository.

    curl -X POST \
      -H "Content-Type: application/json" \
      -d @sample-alert.json \
      localhost:3000

You should see a new message in the Slack channel you configured for the webhook. The message title should be: “⚠️ 🔴 WCUS-R2-Gen2”.

Deploying Your Spin Application

Spin applications can be deployed to any Spin-compatible platform. Many platforms make this process simple with Spin CLI plugins. The following are three options:

- Deploy to Fermyon Wasm Functions, a multi-tenant, hosted, globally distributed engine for serverless functions running on Akamai Cloud, using the aka plugin:

  Note: Fermyon Wasm Functions is currently in limited-access public preview, so you must first fill out this form to request access to the service.

      spin aka deploy

- Deploy to Fermyon Cloud, a multi-tenant, hosted functions service, using the cloud plugin:

      spin cloud deploy

- Deploy to your SpinKube cluster, using the kube plugin:

      spin registry push ttl.sh/azure-slack-bridge:24h
      spin kube scaffold -f ttl.sh/azure-slack-bridge:24h | kubectl apply -f -

After deploying your application, you should have a URL for your middleware Spin application that you can use when setting up your Action Group in the next step.

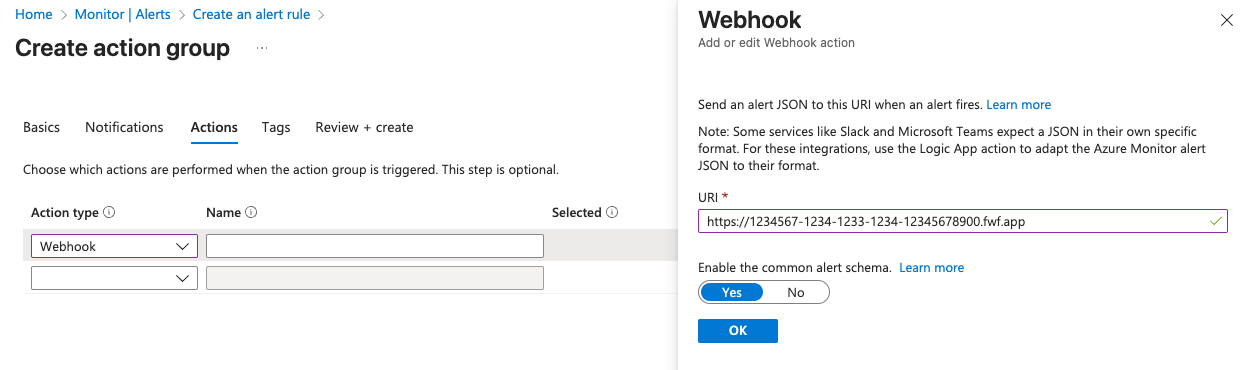

Step 3: Set Up the Azure Alert Pipeline

Follow this Azure tutorial to create an Alert Rule for a threshold of 429 HTTP status codes from Cosmos DB. When creating the rule, create an Action Group with a Webhook action type and link it to the rule. Paste the URL of the deployed application into the URI field of the webhook pane. Be sure to enable the common alert schema for the webhook.

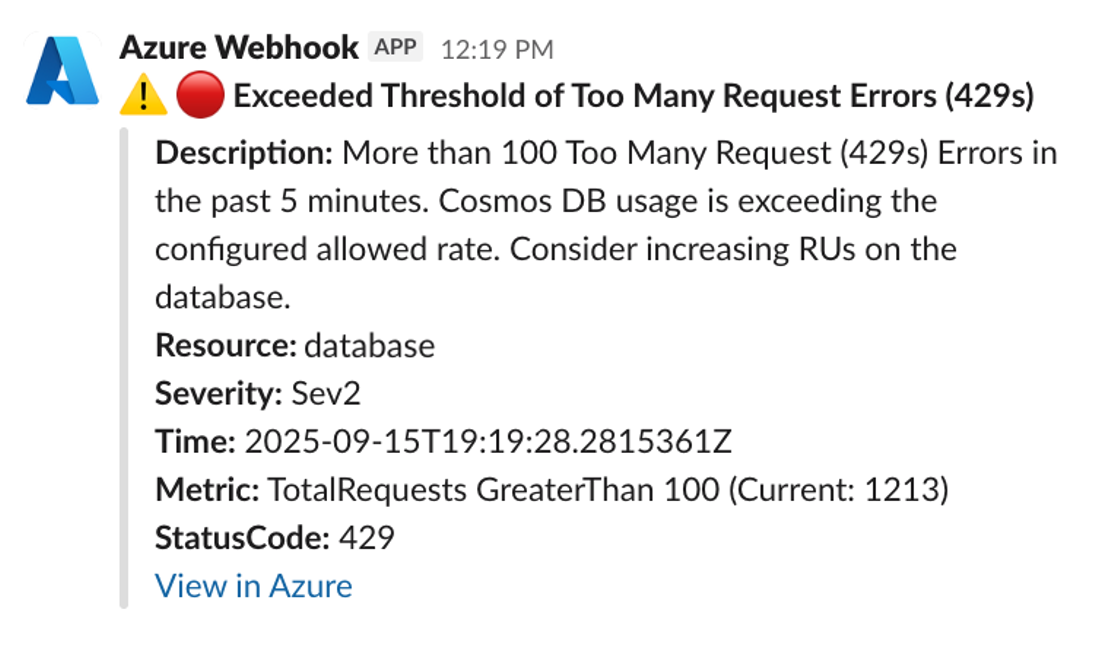

Seeing Alerts in Slack

Now, alerts will be bridged into Slack in clear formatting:

A Bridge over Troubled Water

Bridging Azure alerts into Slack doesn’t need to be complicated. With Spin, you can build lightweight serverless applications that act as middleware between services, transforming JSON payloads, normalizing data formats, and routing events exactly where you need them. While this example focused on Cosmos DB alerts flowing into Slack, the same pattern applies to any Azure service or even other cloud providers.

Try out the Azure Slack Bridge to get started, and then experiment with building your own service bridges to streamline how your team stays informed and responsive.