Azure Cache for Redis as Key-Value Store with SpinKube

Thorsten Hans

In this article, we will explore how to use Azure Cache for Redis as a key-value store for your Spin Apps when running on Azure Kubernetes Service (AKS) with SpinKube. By the end of the article, you will have a Spin App deployed to an AKS cluster that uses Azure Cache for Redis as a transparent cache for an HTTP API. We will also ensure that network connectivity to Azure Cache for Redis is established through an Azure Private Endpoint.

The Cloud (Azure) Infrastructure

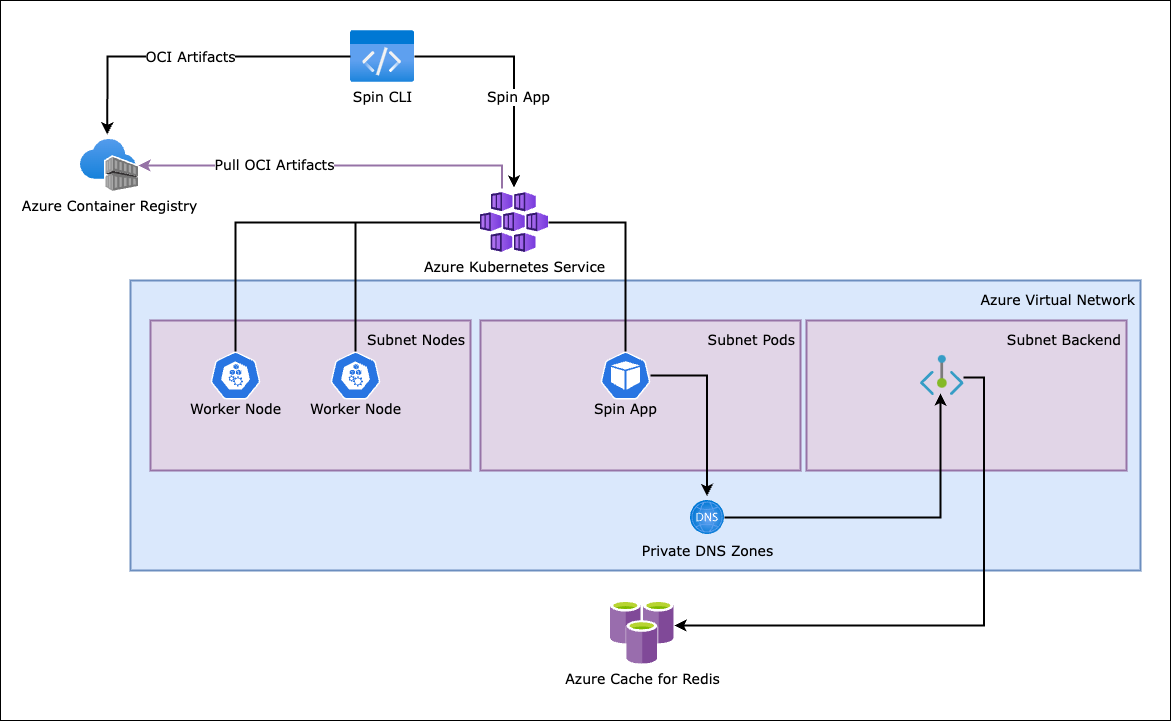

Although we don’t want to go too deep into the underlying cloud (Azure) infrastructure, it’s worth outlining its most important components so that you have a clear picture of how to replicate this setup in your own environment (Azure Subscription).

For building production-grade cloud infrastructures in Azure, we highly recommend exploring the reference architectures provided by Microsoft, such as the Azure Well-Architected Framework (WAF) or other official blueprints.

Let’s quickly highlight some of the components from the infrastructure diagram shown above:

- The AKS cluster is using Azure CNI network plugin with dynamic IP allocation and integrates with an Azure Virtual Network (vNet) which provides dedicated subnets for Kubernetes Worker Nodes and Pods.

- Azure Cache for Redis is integrated with a dedicated subnet (sn-backend) via Azure Private Endpoint and public network access is disabled.

- Although Azure Container Registry (ACR) could be integrated with the vNet, we decided to keep it accessible from outside the vNet to simplify the process of pushing new OCI artifacts to it for the sake of demonstration.

Provisioning the Cloud Infrastructure

Use the following shell script to provision the cloud infrastructure to your Azure Subscription:

#!/bin/bash

set -euo pipefail

# Variables

AKS_NAME=aks-spin-demo

ACR_NAME=spin0524

RG_NAME=rg-spin-demo

REDIS_NAME=spin0524

VNET_NAME=vnet-spin-demo

LOCATION=germanywestcentral

ACR__TOKEN_NAME=spincli

AKS__VM_SIZE=Standard_D4_v5

AKS__NODEPOOL_NAME=spinpool

# Azure Resource Group

az group create -n $RG_NAME -l $LOCATION -onone

# Azure Virtual Network

az network vnet create -n $VNET_NAME \

-g $RG_NAME -l $LOCATION \

--address-prefixes "10.0.0.0/8" --subnet-name sn-nodes \

--subnet-prefix "10.100.0.0/16" -onone

az network vnet subnet create -n sn-pods \

--vnet-name $VNET_NAME -g $RG_NAME \

--address-prefix "10.120.0.0/16" -onone

az network vnet subnet create -n sn-backend \

--vnet-name $VNET_NAME -g $RG_NAME \

--address-prefixes "10.200.0.0/16" \

--private-endpoint-network-policies Disabled -onone

sn_nodes_id=$(az network vnet subnet show -n sn-nodes \

--vnet-name $VNET_NAME -g $RG_NAME -otsv --query "id")

sn_pods_id=$(az network vnet subnet show -n sn-pods \

--vnet-name $VNET_NAME -g $RG_NAME -otsv --query "id")

# Azure Cache for Redis

# Deploying an Azure Cache for Redis instance takes a while (30mins)

redis_id=$(az redis create -n $REDIS_NAME -g $RG_NAME \

-l $LOCATION --sku Standard --vm-size c1 --query "id" -otsv)

az network private-dns zone create -n privatelink.redis.cache.windows.net \

-g $RG_NAME -onone

az network private-dns link vnet create -n pl-redis-$VNET_NAME \

-g $RG_NAME --virtual-network $VNET_NAME \

--zone-name privatelink.redis.cache.windows.net \

--registration-enabled no -onone

az network private-endpoint create -n pe-redis-$REDIS_NAME \

-g $RG_NAME -l $LOCATION \

--vnet-name $VNET_NAME --subnet sn-backend \

--private-connection-resource-id $redis_id \

--group-ids redisCache --connection-name pl-redis-$VNET_NAME \

-onone

az network private-endpoint dns-zone-group create -n dns-zg-redis \

-g $RG_NAME --endpoint-name pe-redis-$REDIS_NAME \

--private-dns-zone "privatelink.redis.cache.windows.net" \

--zone-name $REDIS_NAME -onone

# Azure Container Registry

acr_id=$(az acr create -n $ACR_NAME -g $RG_NAME -l $LOCATION \

--sku Standard --admin-enabled false --query "id" -otsv)

acr_password=$(az acr token create --name $ACR__TOKEN_NAME \

-r $ACR_NAME --scope-map _repositories_push \

--query "credentials.passwords[0].value" -otsv)

echo "Use $ACR__TOKEN_NAME and $acr_password to authenticate at $ACR_NAME.azurecr.io"

# Azure Kubernetes Service

az aks create -n $AKS_NAME -g $RG_NAME -l $LOCATION \

--attach-acr $acr_id --network-plugin azure \

--vnet-subnet-id $sn_nodes_id --pod-subnet-id $sn_pods_id \

--os-sku AzureLinux --nodepool-name $AKS__NODEPOOL_NAME \

--node-vm-size $AKS__VM_SIZE --node-count 1 --max-pods 250 \

--generate-ssh-keys -onone

az aks get-credentials -n $AKS_NAME -g $RG_NAME

For real-world scenarios and production environments you should consider using techniques like Infrastructure as Code (IaC) and tools like Terraform, Pulumi, or Bicep to provision and manage your cloud infrastructure.
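Before running the provisioning script, it can help to sanity-check the address plan. The following is a minimal sketch using only Go's standard library; the helper names (contains, validateSubnets) are hypothetical and not part of any Azure tooling. It checks that the three subnet prefixes from the script lie inside the vNet address space and do not overlap each other.

```go
package main

import (
	"fmt"
	"net/netip"
)

// contains reports whether subnet lies entirely inside vnet.
func contains(vnet, subnet netip.Prefix) bool {
	return subnet.Bits() >= vnet.Bits() && vnet.Contains(subnet.Addr())
}

// validateSubnets checks that every subnet is part of the vNet address
// space and that no two subnets overlap. (Hypothetical helper.)
func validateSubnets(vnetCIDR string, subnets map[string]string) error {
	vnet, err := netip.ParsePrefix(vnetCIDR)
	if err != nil {
		return fmt.Errorf("parsing vNet prefix: %w", err)
	}
	parsed := make(map[string]netip.Prefix, len(subnets))
	for name, cidr := range subnets {
		p, err := netip.ParsePrefix(cidr)
		if err != nil {
			return fmt.Errorf("parsing subnet %s: %w", name, err)
		}
		if !contains(vnet, p) {
			return fmt.Errorf("subnet %s (%s) is outside of %s", name, p, vnet)
		}
		for other, q := range parsed {
			if p.Overlaps(q) {
				return fmt.Errorf("subnet %s overlaps with %s", name, other)
			}
		}
		parsed[name] = p
	}
	return nil
}

func main() {
	// Prefixes taken from the provisioning script above.
	err := validateSubnets("10.0.0.0/8", map[string]string{
		"sn-nodes":   "10.100.0.0/16",
		"sn-pods":    "10.120.0.0/16",
		"sn-backend": "10.200.0.0/16",
	})
	if err != nil {
		fmt.Println("address plan invalid:", err)
		return
	}
	fmt.Println("address plan OK")
}
```

If you change any of the prefixes in the script, adjust the map in main accordingly.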

Deploy SpinKube on Azure Kubernetes Service

We use SpinKube to run WebAssembly workloads (Spin Apps) natively on AKS. Consider exploring the SpinKube documentation to learn more about what SpinKube is, which components it consists of, and the different ways of installing it.

Important: This tutorial relies on release v0.2.0 of the Spin Operator, the most recent release at the time of writing.

For executing the following script, ensure you’ve installed the helm and kubectl CLIs on your machine, and double-check that your kubectl context points to the AKS cluster provisioned in the previous section of this article:

#!/bin/bash

set -euo pipefail

SPINKUBE_VERSION=0.2.0

CERT_MANAGER_VERSION=1.14.3

# Install CRDs

kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v$CERT_MANAGER_VERSION/cert-manager.crds.yaml

kubectl apply -f https://github.com/spinkube/spin-operator/releases/download/v$SPINKUBE_VERSION/spin-operator.crds.yaml

# Install RuntimeClass and SpinAppExecutor

kubectl apply -f https://github.com/spinkube/spin-operator/releases/download/v$SPINKUBE_VERSION/spin-operator.runtime-class.yaml

kubectl apply -f https://github.com/spinkube/spin-operator/releases/download/v$SPINKUBE_VERSION/spin-operator.shim-executor.yaml

# Add Helm repositories and update repository feeds

helm repo add --force-update jetstack https://charts.jetstack.io

helm repo add --force-update kwasm http://kwasm.sh/kwasm-operator/

helm repo add --force-update grafana https://grafana.github.io/helm-charts

helm repo update

# Install cert-manager

helm upgrade --install cert-manager jetstack/cert-manager --namespace cert-manager --create-namespace --version v$CERT_MANAGER_VERSION

# Install Kwasm

helm upgrade --install kwasm-operator kwasm/kwasm-operator --namespace kwasm --create-namespace --set kwasmOperator.installerImage=ghcr.io/spinkube/containerd-shim-spin/node-installer:v0.14.1

# Annotating Kubernetes Nodes

kubectl annotate node --all kwasm.sh/kwasm-node=true

# Installing Spin Operator

helm upgrade --install spin-operator --namespace spin-operator --create-namespace --version $SPINKUBE_VERSION --wait oci://ghcr.io/spinkube/charts/spin-operator

You finished the cloud infrastructure and cluster setup! Now, let’s move on and start implementing the Spin App.

Implementing the Spin App

You can build serverless WebAssembly workloads with Spin, choosing from a wide range of programming languages and templates. For now, you will create a Spin App using (Tiny)Go. Please ensure you have installed the Spin CLI, Go, and TinyGo on your machine.

Start by creating the new Spin App using spin new and the http-go template:

# Create a new Spin App using the http-go template

spin new -t http-go -a cache-with-az-redis

# Move into the app directory

cd cache-with-az-redis

The HTTP API you are going to implement will expose the following HTTP endpoints:

- GET /items - Returns a list of Items

- DELETE /cache - Wipes the underlying cache (key-value store)

The Spin SDK for Go provides the necessary capabilities for implementing full-fledged serverless APIs with ease. Replace the code in main.go with the following:

package main

import (

"encoding/json"

"net/http"

spinhttp "github.com/fermyon/spin/sdk/go/v2/http"

"github.com/fermyon/spin/sdk/go/v2/kv"

)

const (

CACHE_KEY_ALL_ITEMS = "ALL_ITEMS"

KV_STORE_NAME = "default"

HEADER_NAME_SERVED_FROM_CACHE = "X-Served-From-Cache"

HEADER_NAME_CONTENT_TYPE = "Content-Type"

CONTENT_TYPE_JSON = "application/json"

)

func init() {

spinhttp.Handle(func(w http.ResponseWriter, r *http.Request) {

router := spinhttp.NewRouter()

router.GET("/items", getItems)

router.DELETE("/cache", invalidateCache)

router.ServeHTTP(w, r)

})

}

func getItems(w http.ResponseWriter, r *http.Request, params spinhttp.Params) {

store, err := kv.OpenStore(KV_STORE_NAME)

if err != nil {

http.Error(w, "Error while opening KV store", 500)

return

}

exists, err := store.Exists(CACHE_KEY_ALL_ITEMS)

if err != nil {

http.Error(w, "Error while checking KV store for key", 500)

return

}

if exists {

returnFromCache(store, CACHE_KEY_ALL_ITEMS, w)

return

}

all := loadItems()

payload, err := json.Marshal(all)

if err != nil {

http.Error(w, "Error while encoding items", 500)

return

}

store.Set(CACHE_KEY_ALL_ITEMS, payload)

header := w.Header()

header.Set(HEADER_NAME_CONTENT_TYPE, CONTENT_TYPE_JSON)

header.Set(HEADER_NAME_SERVED_FROM_CACHE, "false")

w.Write(payload)

}

func invalidateCache(w http.ResponseWriter, r *http.Request, params spinhttp.Params) {

store, err := kv.OpenStore(KV_STORE_NAME)

if err != nil {

http.Error(w, "Error while opening KV store", 500)

return

}

err = store.Delete(CACHE_KEY_ALL_ITEMS)

if err != nil {

http.Error(w, "Error while removing item from KV store", 500)

return

}

w.WriteHeader(204)

}

func loadItems() []item {

return []item{

item{Id: 1, Title: "Black Coffee"},

item{Id: 2, Title: "Latte"},

item{Id: 3, Title: "Caramel Latte"},

item{Id: 4, Title: "Cappuccino"},

item{Id: 5, Title: "Americano"},

item{Id: 6, Title: "Espresso"},

}

}

func returnFromCache(store *kv.Store, key string, w http.ResponseWriter) {

all, err := store.Get(key)

if err != nil {

http.Error(w, "Error while loading items from kv store", 500)

return

}

h := w.Header()

h.Set(HEADER_NAME_CONTENT_TYPE, CONTENT_TYPE_JSON)

h.Set(HEADER_NAME_SERVED_FROM_CACHE, "true")

w.Write(all)

}

type item struct {

Id int32 `json:"id"`

Title string `json:"title"`

}

func main() {}

The code above uses the Router to register API endpoints, and it leverages the kv module to implement transparent caching based on a key-value store.
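The caching logic in getItems follows the common cache-aside pattern: check the store, return the cached payload on a hit, otherwise load, serialize, store, and return. The following is a minimal sketch of that pattern in isolation. The Store interface, memStore, and cachedJSON are hypothetical names introduced for illustration (they are not part of the Spin SDK), and an in-memory map stands in for the key-value store so that the sketch runs without Spin.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Store is a hypothetical subset of key-value operations,
// not the Spin SDK type.
type Store interface {
	Exists(key string) (bool, error)
	Get(key string) ([]byte, error)
	Set(key string, value []byte) error
	Delete(key string) error
}

// memStore is an in-memory stand-in for a real key-value store.
type memStore map[string][]byte

func (m memStore) Exists(key string) (bool, error) { _, ok := m[key]; return ok, nil }
func (m memStore) Get(key string) ([]byte, error) {
	v, ok := m[key]
	if !ok {
		return nil, fmt.Errorf("key %q not found", key)
	}
	return v, nil
}
func (m memStore) Set(key string, value []byte) error { m[key] = value; return nil }
func (m memStore) Delete(key string) error            { delete(m, key); return nil }

type item struct {
	Id    int32  `json:"id"`
	Title string `json:"title"`
}

// cachedJSON returns the JSON payload for key and reports whether it was
// served from the cache. On a miss, it calls load, encodes the result,
// and stores the payload before returning it.
func cachedJSON(s Store, key string, load func() any) ([]byte, bool, error) {
	exists, err := s.Exists(key)
	if err != nil {
		return nil, false, err
	}
	if exists {
		payload, err := s.Get(key)
		return payload, err == nil, err
	}
	payload, err := json.Marshal(load())
	if err != nil {
		return nil, false, err
	}
	if err := s.Set(key, payload); err != nil {
		return nil, false, err
	}
	return payload, false, nil
}

func main() {
	store := memStore{}
	load := func() any { return []item{{Id: 1, Title: "Black Coffee"}} }
	for i := 1; i <= 2; i++ {
		payload, fromCache, err := cachedJSON(store, "ALL_ITEMS", load)
		if err != nil {
			fmt.Println("error:", err)
			return
		}
		fmt.Printf("request %d: fromCache=%t payload=%s\n", i, fromCache, payload)
	}
}
```

Unlike the handler above, this sketch treats a failed Set as an error. Whether a cache write failure should fail the request or just be logged is a design choice that depends on your application.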

Due to the nature of WebAssembly, every Spin App is executed in a strict sandbox, which means you have to explicitly grant your Spin App the permission to use the key-value store. Spin streamlines this experience a lot – all you have to do is add a key_value_stores property in the Spin Manifest (spin.toml) and provide the names of the key-value stores you want your Spin App to use:

spin_manifest_version = 2

[application]

name = "cache-with-az-redis"

version = "0.1.0"

authors = ["Thorsten Hans <thorsten.hans@fermyon.com>"]

description = ""

[[trigger.http]]

route = "/..."

component = "cache-with-az-redis"

[component.cache-with-az-redis]

source = "main.wasm"

allowed_outbound_hosts = []

key_value_stores = ["default"]

[component.cache-with-az-redis.build]

command = "tinygo build -target=wasi -gc=leaking -no-debug -o main.wasm main.go"

watch = ["**/*.go", "go.mod"]

Finally, compile the Spin App using spin build:

# Build the Spin App

spin build

Building component cache-with-az-redis with `tinygo build -target=wasi -gc=leaking -no-debug -o main.wasm main.go`

Finished building all Spin components

Run the App locally using SQLite as key-value store

Let’s test the Spin App locally. Instead of using Azure Cache for Redis when running locally, your Spin App can rely on SQLite as a key-value store and have spin take care of everything. This streamlines the inner-loop experience and allows you to remain focused on implementing the Spin App instead of managing the necessary infrastructure components. Running the Spin App on your machine is as simple as invoking spin up:

# Run the Spin App

spin up

Logging component stdio to ".spin/logs/"

Storing default key-value data to ".spin/sqlite_key_value.db"

Serving http://127.0.0.1:3000

Available Routes:

cache-with-az-redis: http://127.0.0.1:3000 (wildcard)

Use curl (or a similar tool) to invoke the endpoints provided by the Spin App and verify the x-served-from-cache HTTP header is specifying true for subsequent invocations of the GET /items endpoint:

# Invoke GET /items for the 1st time

# We DO NOT expect x-served-from-cache: true as HTTP Response Header

curl -iX GET http://localhost:3000/items

HTTP/1.1 200 OK

content-type: application/json

x-served-from-cache: false

content-length: 178

date: Mon, 13 May 2024 09:05:38 GMT

[{"id":1,"title":"Black Coffee"},{"id":2,"title":"Latte"},{"id":3,"title":"Caramel Latte"},{"id":4,"title":"Cappuccino"},{"id":5,"title":"Americano"},{"id":6,"title":"Espresso"}]

# Invoke GET /items for the 2nd time

# We DO expect x-served-from-cache: true as HTTP Response Header

curl -iX GET http://localhost:3000/items

HTTP/1.1 200 OK

content-type: application/json

x-served-from-cache: true

content-length: 178

date: Mon, 13 May 2024 09:06:50 GMT

[{"id":1,"title":"Black Coffee"},{"id":2,"title":"Latte"},{"id":3,"title":"Caramel Latte"},{"id":4,"title":"Cappuccino"},{"id":5,"title":"Americano"},{"id":6,"title":"Espresso"}]

To wipe the cache (key-value store), you send a DELETE request to the /cache endpoint as shown here:

# Wipe the Cache (key-value store)

curl -iX DELETE http://localhost:3000/cache

HTTP/1.1 204 No Content

date: Mon, 13 May 2024 09:07:23 GMT
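The manual curl checks above can also be scripted. The following is a minimal sketch of a small Go client that sends GET requests and inspects the x-served-from-cache response header. The helper names (servedFromCache, newFakeAPI) are hypothetical. To keep the sketch runnable on its own, it talks to a local stand-in server that only mimics the header behaviour; to check the real app, you would pass http.DefaultClient and http://localhost:3000/items instead.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"sync"
)

const servedFromCacheHeader = "X-Served-From-Cache"

// servedFromCache sends a GET request to url and reports whether the
// response carries the header value "true".
func servedFromCache(client *http.Client, url string) (bool, error) {
	resp, err := client.Get(url)
	if err != nil {
		return false, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return false, fmt.Errorf("unexpected status %s", resp.Status)
	}
	return resp.Header.Get(servedFromCacheHeader) == "true", nil
}

// newFakeAPI starts a local stand-in for the Spin App: the first request
// is answered with "false", every later request with "true".
func newFakeAPI() *httptest.Server {
	var mu sync.Mutex
	warm := false
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		mu.Lock()
		defer mu.Unlock()
		w.Header().Set(servedFromCacheHeader, fmt.Sprint(warm))
		warm = true
		w.Write([]byte("[]"))
	}))
}

func main() {
	srv := newFakeAPI()
	defer srv.Close()
	for i := 1; i <= 2; i++ {
		cached, err := servedFromCache(srv.Client(), srv.URL+"/items")
		if err != nil {
			fmt.Println("request failed:", err)
			return
		}
		fmt.Printf("request %d served from cache: %t\n", i, cached)
	}
}
```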

Creating a Runtime Configuration file

To use Azure Cache for Redis as a key-value store, you don’t have to make any code changes. Instead, you provide a dedicated Runtime Configuration that instructs Spin to use the managed service instead of SQLite. Before creating the Runtime Configuration file, let’s grab the primary key for accessing Azure Cache for Redis:

# Grab the Redis Password and Store it in a variable

redis_password=$(az redis list-keys --name $REDIS_NAME -g $RG_NAME --query "primaryKey" -otsv)

The Runtime Configuration file for Spin is a regular .toml file. Create a new file (az.toml) and provide the following content:

[key_value_store.default]

type = "redis"

url = "rediss://:<YOUR_REDIS_PASSWORD>@<YOUR_REDIS_NAME>.redis.cache.windows.net:6380"

Replace <YOUR_REDIS_PASSWORD> with the value of $redis_password and <YOUR_REDIS_NAME> with the value of $REDIS_NAME.

Also note that the protocol is rediss instead of redis, indicating that the outbound connection uses TLS.
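If you prefer to generate the connection URL instead of editing it by hand, the following minimal sketch builds it with Go's net/url package, which percent-encodes characters such as / in the password. The function names (redisURL, runtimeConfig) and the REDIS_NAME and REDIS_PASSWORD environment variables are assumptions made for illustration. It also assumes that the Redis client used by Spin decodes percent-encoded passwords, which you should verify for your Spin version.

```go
package main

import (
	"fmt"
	"net/url"
	"os"
)

// redisURL builds a TLS (rediss) connection URL for an Azure Cache for
// Redis instance. The user name is left empty, as in the az.toml above.
func redisURL(name, password string) string {
	u := url.URL{
		Scheme: "rediss",
		User:   url.UserPassword("", password),
		Host:   name + ".redis.cache.windows.net:6380",
	}
	return u.String()
}

// runtimeConfig renders the content of the Runtime Configuration file.
func runtimeConfig(name, password string) string {
	return fmt.Sprintf("[key_value_store.default]\ntype = \"redis\"\nurl = %q\n",
		redisURL(name, password))
}

func main() {
	// REDIS_NAME and REDIS_PASSWORD are assumed to be exported
	// in the environment before running this sketch.
	fmt.Print(runtimeConfig(os.Getenv("REDIS_NAME"), os.Getenv("REDIS_PASSWORD")))
}
```

Redirecting the output to az.toml would give you the same file as the manual approach, with the password encoded for use inside a URL.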

Whitelist Outbound Network Connectivity

Again, due to strict sandboxing of WebAssembly Modules, you must allow the Spin App to establish outbound network connectivity to your instance of Azure Cache for Redis. Modify the Spin Manifest (spin.toml), and update the allowed_outbound_hosts property of the cache-with-az-redis component:

[component.cache-with-az-redis]

source = "main.wasm"

allowed_outbound_hosts = ["redis://<YOUR_REDIS_NAME>.redis.cache.windows.net:6380"]

key_value_stores = ["default"]

Replace <YOUR_REDIS_NAME> with the value of $REDIS_NAME.

Deploying the Spin App

Spin Apps are distributed via OCI artifacts. Before you can push the OCI artifact representing your Spin App to ACR, you must authenticate using the spin registry login command:

# Authenticate with ACR

spin registry login -u $ACR__TOKEN_NAME -p <ACR_PASSWORD> $ACR_NAME.azurecr.io

Once you’ve authenticated, you can push the Spin App to ACR. Specifying the --build flag as part of spin registry push guarantees that the most recent source code is compiled down to WebAssembly before packaging and pushing the OCI artifact:

# Build and Push the Spin App as OCI artifact

spin registry push --build $ACR_NAME.azurecr.io/cache-with-az-redis:0.0.1

Once the OCI artifact is persisted in ACR, you can use the kube plugin for spin to scaffold the necessary Kubernetes deployment manifests. Use the --runtime-config-file (-c) flag - in addition to the --from (-f) flag - to ensure the custom Runtime Configuration File (az.toml) is used to create the necessary Kubernetes Secret:

# Scaffold Kubernetes Deployment Manifests

# and store them in spinapp.yaml

spin kube scaffold -f $ACR_NAME.azurecr.io/cache-with-az-redis:0.0.1 \

-c az.toml > spinapp.yaml

With the Kubernetes Deployment manifests being written to spinapp.yaml, you can use kubectl apply to deploy the Spin App on the AKS cluster:

# Deploy the Spin App to AKS

kubectl apply -f spinapp.yaml

spinapp.core.spinoperator.dev/cache-with-az-redis created

secret/cache-with-az-redis-runtime-config created

Testing the Spin App running in Azure

Because the cloud infrastructure does not contain an Ingress Controller (e.g., Azure Application Gateway in the case of Azure), you must use port-forwarding to invoke your Spin App running on AKS.

You can either configure port-forwarding to one of the Pods being provisioned by Spin Operator, or you can establish port-forwarding to the corresponding Kubernetes Service (which is also provisioned and managed by Spin Operator automatically):

# Configure Port Forwarding to the Kubernetes Service

kubectl port-forward svc/cache-with-az-redis 8080:80

Forwarding from 127.0.0.1:8080 -> 80

Forwarding from [::1]:8080 -> 80

From within an additional terminal, you can use curl to invoke the HTTP endpoints as shown here:

## Invoke the GET /items endpoint for the 1st time

curl -iX GET http://localhost:8080/items

HTTP/1.1 200 OK

content-type: application/json

x-served-from-cache: false

content-length: 178

date: Mon, 13 May 2024 09:42:38 GMT

[{"id":1,"title":"Black Coffee"},{"id":2,"title":"Latte"},{"id":3,"title":"Caramel Latte"},{"id":4,"title":"Cappuccino"},{"id":5,"title":"Americano"},{"id":6,"title":"Espresso"}]

## Invoke the GET /items endpoint for the 2nd time

curl -iX GET http://localhost:8080/items

HTTP/1.1 200 OK

content-type: application/json

x-served-from-cache: true

content-length: 178

date: Mon, 13 May 2024 09:44:50 GMT

[{"id":1,"title":"Black Coffee"},{"id":2,"title":"Latte"},{"id":3,"title":"Caramel Latte"},{"id":4,"title":"Cappuccino"},{"id":5,"title":"Americano"},{"id":6,"title":"Espresso"}]

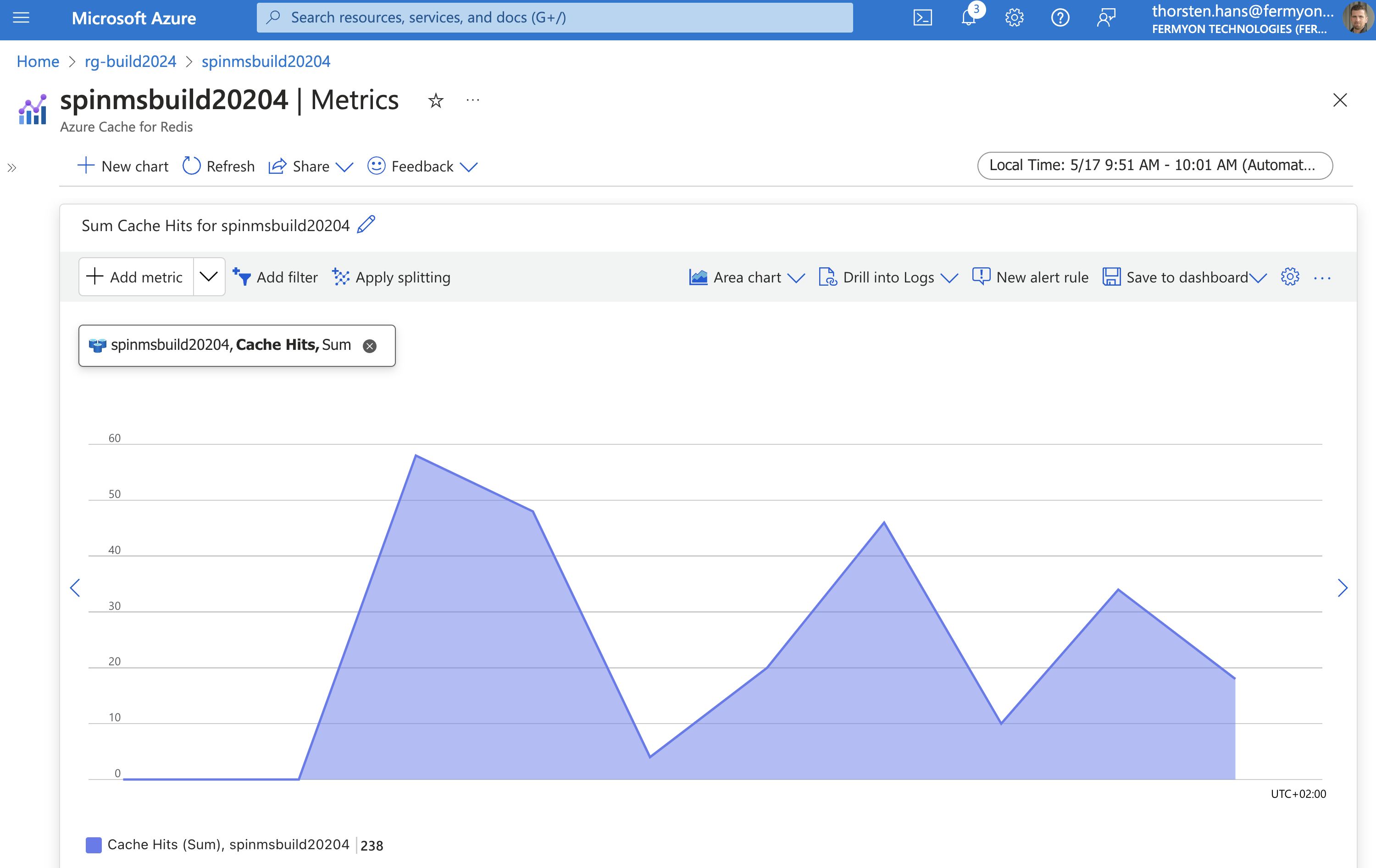

Finally, let’s verify that our Azure Cache for Redis instance has been used as a key-value store. The easiest way to do this is by using the Azure Portal and inspecting the Metrics of the Azure Cache for Redis instance. (Keep in mind that metrics need a couple of minutes to show up in the Azure Portal.) You should see some Cache Hits; the actual count depends on how many requests you sent to the GET /items endpoint.

Removing the Cloud Infrastructure

To remove the cloud infrastructure you’ve provisioned as part of this article, you can use the following script:

#!/bin/bash

set -euo pipefail

RG_NAME=rg-spin-demo

echo "Deleting Azure Resource Group '$RG_NAME' ..."

az group delete -n $RG_NAME

Conclusion

Integrating Spin Apps with surrounding cloud services when running on SpinKube is mission-critical when building real-world applications. Azure Cache for Redis is a great example of using Platform-as-a-Service (PaaS) services when building serverless workloads with Spin. The ability to alter runtime behavior by providing environment-specific Runtime Configuration files, without having to recompile the app to Wasm, makes your Spin Apps portable across different environments and platforms.