Build AI Agents with Spin and the OpenAI Agents SDK

Thorsten Hans

Spin

AI

TypeScript

Agents are everywhere! You likely interact with them every day, from copilots and chat assistants to automated workflows. Hardly a day passes without another major AI announcement that involves agents. In today’s post, we’ll explore how you can build a custom AI agent using the OpenAI Agents SDK with Spin and run it on truly serverless, globally distributed Fermyon Wasm Functions.

What Are AI Agents BTW?

AI agents are programs powered by large language models (LLMs) that don’t just generate text but can reason, plan, and take actions. Instead of hardcoding logic, you describe what the agent should do, and it figures out how to accomplish tasks, often by calling tools or APIs.

Think of them as an orchestration layer where the LLM handles decision-making, while your code provides capabilities. For developers, this means moving from writing if/else flows to defining reusable building blocks the agent can leverage dynamically.
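To make the split between decision-making and capabilities concrete, here is a minimal sketch in plain TypeScript. It is not the OpenAI Agents SDK: `ToolSpec`, `Decide`, and `runOnce` are hypothetical names, and a simple keyword rule stands in for the LLM so the example runs offline.

```typescript
// A capability the agent can call: a name, a description, and a function.
interface ToolSpec {
  name: string;
  description: string;
  execute: (input: string) => string;
}

// Hypothetical stand-in for the LLM: returns the name of the tool to use.
type Decide = (input: string, tools: ToolSpec[]) => string;

// One decision step: ask "the model" which tool to use, then run that tool.
function runOnce(input: string, tools: ToolSpec[], decide: Decide): string {
  const chosen = decide(input, tools);
  const tool = tools.find((t) => t.name === chosen);
  if (!tool) {
    throw new Error(`Unknown tool: ${chosen}`);
  }
  return tool.execute(input);
}

const tools: ToolSpec[] = [
  { name: "echo", description: "Repeats the input.", execute: (s) => s },
  { name: "shout", description: "Upper-cases the input.", execute: (s) => s.toUpperCase() },
];

// A keyword rule replaces the model here so the sketch needs no API key.
const keywordDecide: Decide = (input) => (input.endsWith("!") ? "shout" : "echo");
```

In a real agent, the `decide` step is the model’s choice of tool, while the tools themselves stay ordinary, deterministic code.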

What is The OpenAI Agents SDK?

The OpenAI Agents SDK is a set of APIs that makes it easy to build, run, and customize AI agents inside your own applications. It provides abstractions for defining agents, registering tools, and handling handoffs between LLM-driven reasoning and deterministic code.

With TypeScript support, you can wire agents directly into modern serverless and edge-native apps without reinventing orchestration logic. For developers, it removes boilerplate and lets you focus on defining the what and how of your agent’s capabilities.

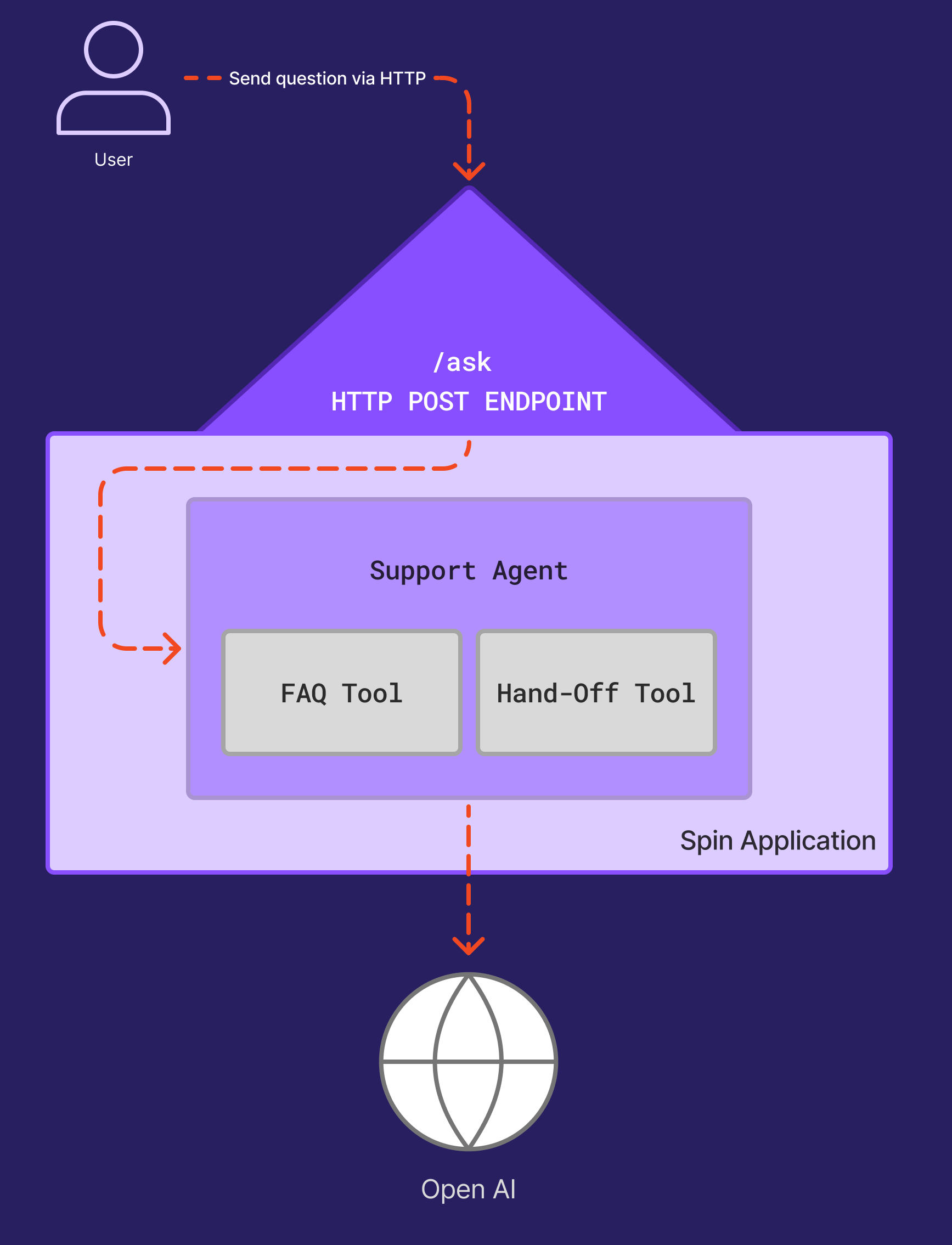

Now that we know what AI agents are, we’ll use the OpenAI Agents SDK to build a simple “Support Agent” with Spin and Fermyon Wasm Functions. Running your agent as a serverless function makes it instantly scalable and cost-efficient. It spins up when needed, handles requests in milliseconds, and shuts down when idle. It’s an ideal way to power lightweight, event-driven AI experiences without managing infrastructure, while keeping compute close to users for fast, secure responses.

Let’s get started!

Scaffolding the Spin Support Agent Application

First, we’ll create a new TypeScript Spin application using the spin new command. We’ll then run spin build, which installs the necessary dependencies before compiling the skeleton down to WebAssembly. Once the build succeeds, we’ll install the OpenAI Agents SDK (@openai/agents).

Although the Spin CLI comes with a bunch of default templates installed, you could also roll your own templates as we explained in the “Authoring Custom Spin Templates” article.

spin new -t http-ts --value http-router=hono -a support-agent

cd support-agent

spin build

At this point, you should see an output similar to this:

Building component support-agent (2 commands)

Running build step 1/2 for component support-agent with 'npm install'

added 201 packages, and audited 202 packages in 13s

43 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

Running build step 2/2 for component support-agent with 'npm run build'

> support-agent@1.0.0 build

> npx webpack && mkdirp dist && j2w -i build/bundle.js -o dist/support-agent.wasm

Componentizing...

Component successfully written.

Finished building all Spin components

Install the Spin Variables package and the OpenAI Agents SDK using npm:

npm install @spinframework/spin-variables

npm install @openai/agents

With the project created and all necessary dependencies installed, we can move on and start implementing our support agent.

Implementing the Spin Support Agent

First, let’s design the public API of our Spin application. All we need is a POST endpoint that accepts incoming user questions. That said, start by changing src/index.ts to the following:

import { Hono } from 'hono';

import { fire } from 'hono/service-worker';

import type { Context } from 'hono';

import { logger } from 'hono/logger';

interface QuestionModel {

question: string

}

let app = new Hono();

app.use(logger());

app.post("/ask", async (c: Context) => {

try {

const body = await c.req.json<QuestionModel>();

if (typeof body.question !== "string" || body.question.trim() === "") {

return c.json({ error: "Invalid request body" }, 400);

}

// we will hook up our agent here

return c.json({ received: body.question });

} catch {

return c.json({ error: "Invalid JSON" }, 400);

}

});

fire(app);
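The request validation can also be factored out of the handler into a type guard, which makes it testable without starting Spin. The sketch below assumes a hypothetical helper named `isQuestionModel`; it is not part of Hono or the Spin SDK.

```typescript
interface QuestionModel {
  question: string;
}

// Narrows an unknown JSON payload to QuestionModel.
// Rejects non-objects, null, and missing, non-string, or blank questions.
function isQuestionModel(value: unknown): value is QuestionModel {
  if (typeof value !== "object" || value === null) {
    return false;
  }
  const question = (value as Record<string, unknown>).question;
  return typeof question === "string" && question.trim() !== "";
}
```

Inside the handler, `if (!isQuestionModel(body))` could then replace the inline check. It also covers payloads such as `null`, which the inline check would only catch through the surrounding try/catch.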

Designing The Agent

Our Support Agent won’t be too complicated. It consists of two distinct tools: one to answer frequently asked questions and another to hand off questions to a human.

Implementing The Agent

Implementing the agent is a matter of connecting the dots. First, let’s create a SupportAgent class (src/support-agent.ts), specify its constructor and the public ask function:

import { Agent, run, setDefaultOpenAIKey, tool, Tool } from '@openai/agents';

import * as variables from '@spinframework/spin-variables';

import { z } from 'zod';

export class SupportAgent {

private openAiApiKey: string | null = null;

public constructor() {

const key = variables.get("openai_api_key");

if (!key) {

throw new Error("openai_api_key not specified in application manifest");

}

this.openAiApiKey = key

}

public async ask(question: string): Promise<string> {

// we will replace this soon

return Promise.resolve("to-be-replaced")

}

}

Before implementing ask we have to create our tools (faqTool and handoffTool). To do so, add two new private properties to the SupportAgent class and initialize them accordingly:

export class SupportAgent {

// ...

private faqTool: Tool = tool({

name: "faq",

description: "Answers common questions about Spin and Fermyon Wasm Functions.",

parameters: z.object({ question: z.string() }),

execute: async ({ question }) => {

const normalized = question.toLowerCase();

if (normalized.includes("what is spin")) {

return "Spin is a developer tool for building and running WebAssembly applications.";

}

if (normalized.includes("what is fermyon wasm functions") ||

normalized.includes("what is fwf")) {

return "Fermyon Wasm Functions is a globally distributed serverless platform for running WebAssembly workloads on top of Akamai Cloud.";

}

return "No answer found in FAQ.";

},

});

private handoffTool: Tool = tool({

name: "human_support",

description: "Escalates the question to a human support agent.",

parameters: z.object({ question: z.string() }),

execute: async ({ question }) => {

console.log("Escalating to human support:", question);

return "Your request has been forwarded to a human support agent. They will follow up soon.";

},

});

// ...

}
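The keyword matching inside the FAQ tool could also live in a plain function backed by a lookup table, so new entries become data rather than additional if-branches. This is a sketch under that assumption; `FaqEntry`, `FAQ_ENTRIES`, and `lookupFaq` are hypothetical names, and the answers are copied from the tool above.

```typescript
// Each entry lists the phrases that trigger it and the answer to return.
interface FaqEntry {
  phrases: string[];
  answer: string;
}

const FAQ_ENTRIES: FaqEntry[] = [
  {
    phrases: ["what is spin"],
    answer: "Spin is a developer tool for building and running WebAssembly applications.",
  },
  {
    phrases: ["what is fermyon wasm functions", "what is fwf"],
    answer:
      "Fermyon Wasm Functions is a globally distributed serverless platform for running WebAssembly workloads on top of Akamai Cloud.",
  },
];

const NO_ANSWER = "No answer found in FAQ.";

// Returns the first answer whose phrase occurs in the question (case-insensitive).
function lookupFaq(question: string): string {
  const normalized = question.toLowerCase();
  const match = FAQ_ENTRIES.find((entry) =>
    entry.phrases.some((phrase) => normalized.includes(phrase)),
  );
  return match ? match.answer : NO_ANSWER;
}
```

The tool’s `execute` callback could then shrink to `async ({ question }) => lookupFaq(question)`.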

For the sake of this article, the handoffTool won’t do anything besides logging to stdout. A more realistic implementation could create a new support ticket by sending an HTTP request (see the Spin documentation to learn how to make outbound HTTP requests).
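As a rough idea of what such a ticket request might look like, the following sketch assembles the URL and request options separately from sending them. Everything here is an assumption for illustration: the endpoint URL, the payload fields, and the names `TicketRequest`, `TICKET_ENDPOINT`, and `buildTicketRequest` do not come from any real ticketing system.

```typescript
// The pieces needed for a fetch(url, init) call.
interface TicketRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Hypothetical ticket endpoint; replace it with your ticketing system's API.
const TICKET_ENDPOINT = "https://tickets.example.com/api/tickets";

// Builds a JSON POST request describing the escalated question.
function buildTicketRequest(question: string): TicketRequest {
  return {
    url: TICKET_ENDPOINT,
    init: {
      method: "POST",
      headers: { "content-type": "application/json" },
      body: JSON.stringify({ subject: "Escalated support question", question }),
    },
  };
}
```

Inside `execute`, the request could then be sent with `await fetch(request.url, request.init)`. The ticket host would also have to be listed in the component’s allowed_outbound_hosts.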

Next, update the ask function. After setting the OpenAI API key, we define the agent, register our tools, and run the agent:

export class SupportAgent {

// ...

public async ask(question: string): Promise<string> {

setDefaultOpenAIKey(this.openAiApiKey!)

const supportAgent = new Agent({

name: "SupportAgent",

model: "gpt-4.1-mini",

instructions: `You are a support agent.

Use the FAQ tool if you can answer from it.

If the FAQ tool says "No answer found", then call the human_support tool.`,

tools: [

this.faqTool,

this.handoffTool

],

});

// Errors thrown by run() reject the returned promise and reach the caller

const agentResponse = await run(supportAgent, question);

return agentResponse.finalOutput!;

}

}

Integrating the SupportAgent into the API Endpoint

Back in src/index.ts, let’s bring our SupportAgent class into scope by adding the following import statement at the beginning of the file:

import { SupportAgent } from './support-agent';

Update the handler for the /ask endpoint. Create a new instance of the SupportAgent and call its ask method as shown below:

app.post("/ask", async (c: Context) => {

try {

const model = await c.req.json<QuestionModel>();

if (typeof model.question !== "string" || model.question.trim() === "") {

return c.json({ error: "Invalid request body" }, 400);

}

try{

const agent = new SupportAgent();

const response = await agent.ask(model.question);

return c.json({ message: response});

} catch (err) {

console.log(`Error while running the agent ${err}`);

return c.json({error: "Internal Server Error"}, 500);

}

} catch {

return c.json({ error: "Invalid JSON" }, 400);

}

});

Updating the Spin Manifest

Last but not least, we have to make two important updates to the Spin manifest (spin.toml):

- Add the openai_api_key variable and allow our component to use it

- Allow outbound HTTP connections to https://api.openai.com from our component

Add a new [variables] block to the manifest:

[variables]

openai_api_key = { secret = true, required = true }

To allow our component to use the variable, we have to add the following TOML:

[component.support-agent.variables]

openai_api_key = "{{ openai_api_key }}"

In the component configuration block ([component.support-agent]), add or update the allowed_outbound_hosts array to allow connections to the OpenAI API:

[component.support-agent]

source = "dist/support-agent.wasm"

exclude_files = ["**/node_modules"]

allowed_outbound_hosts = ["https://api.openai.com"]

Acquire an OpenAI API Key

Before we can run our Support Agent, we must source an OpenAI API Key. Head over to the OpenAI API Platform and create a new API Key.

Testing the Support Agent

Use spin up to run the application on your local machine. By default, it will start the application on port 3000:

SPIN_VARIABLE_OPENAI_API_KEY=<YOUR_KEY_HERE> spin up --build

The command will block your terminal and provide the actual endpoint of your Support Agent:

Building component support-agent (2 commands)

Running build step 1/2 for component support-agent with 'npm install'

up to date, audited 203 packages in 624ms

43 packages are looking for funding

run `npm fund` for details

found 0 vulnerabilities

Running build step 2/2 for component support-agent with 'npm run build'

> support-agent@1.0.0 build

> npx webpack && mkdirp dist && j2w --initLocation http://support-agent.localhost -i build/bundle.js -o dist/support-agent.wasm

Componentizing...

Component successfully written.

Finished building all Spin components

Logging component stdio to ".spin/logs/"

Serving http://127.0.0.1:3000

Available Routes:

support-agent: http://127.0.0.1:3000 (wildcard)

Use a tool like curl to send an HTTP request to http://localhost:3000/ask as shown in the next snippet:

curl --json '{"question": "What is Spin?"}' http://localhost:3000/ask

You should see the Support Agent responding with something similar to this:

{

"message":"Spin is a developer tool for building and running WebAssembly applications."

}

Next, ask a question which is not part of the FAQs:

curl --json '{"question": "How do I use kv store when building a spin application with python?"}' http://localhost:3000/ask

You’ll see the agent respond with a JSON message like this after calling the handoffTool:

{

"message":"I have forwarded your question to a human support agent who will assist you shortly with using KV store when building a Spin application with Python. If you have any other questions, feel free to ask!"

}

Additionally, it will print the following to stdout of the spin up process:

Escalating to human support: How do I use kv store when building a spin application with python?
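If you call the endpoint from code rather than curl, the two response shapes produced by the handler (`message` on success, `error` on failure) can be handled with a small parser. This is a sketch only; `AgentReply` and `parseAgentReply` are hypothetical names, not part of Spin or the OpenAI Agents SDK.

```typescript
// The two outcomes a caller of /ask has to deal with.
type AgentReply =
  | { kind: "message"; text: string }
  | { kind: "error"; text: string };

// Parses a raw response body from /ask into a tagged result.
function parseAgentReply(rawBody: string): AgentReply {
  let payload: unknown;
  try {
    payload = JSON.parse(rawBody);
  } catch {
    return { kind: "error", text: "Response was not valid JSON" };
  }
  if (typeof payload === "object" && payload !== null) {
    const fields = payload as Record<string, unknown>;
    if (typeof fields.message === "string") {
      return { kind: "message", text: fields.message };
    }
    if (typeof fields.error === "string") {
      return { kind: "error", text: fields.error };
    }
  }
  return { kind: "error", text: "Unexpected response shape" };
}
```

A caller can then switch on `kind` instead of probing the JSON for optional fields.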

Deploying the Support Agent to Fermyon Wasm Functions

For deploying the Support Agent to Fermyon Wasm Functions, you can use the sub-commands of spin aka as shown in the following snippet:

# Compile the latest source code down to Wasm

spin build

# Deploy the Spin application to Fermyon Wasm Functions

spin aka deploy --create-name support-agent \

--variable openai_api_key=<YOUR_OPEN_AI_API_KEY> \

--no-confirm

Deploying workloads to Fermyon Wasm Functions takes roughly 60 seconds. As part of the deployment, your application will be placed in all service regions across the globe so that compute can happen as close to your users as possible, minimizing network latency. The spin aka deploy command will respond with the public endpoint (URL) of your Support Agent.

Once you’ve received the application URL, you can use curl again to interact with the Support Agent running on Fermyon Wasm Functions:

curl --json '{"question": "What is Fermyon Wasm Functions"}' <YOUR_APPLICATION_URL>/ask

Again, the Support Agent should respond with a message similar to this:

{

"message": "FWF stands for Fermyon Wasm Functions. It is a globally distributed serverless platform designed for running WebAssembly workloads on top of the Akamai Cloud. If you have any more questions about it, feel free to ask!"

}

Conclusion

As you’ve seen, building AI Agents doesn’t have to be complicated. With the OpenAI Agents SDK, you can go from defining a simple agent to wiring it up with tools and handoffs in just a few lines of TypeScript. The abstractions provided by the SDK handle the orchestration, so you can focus on what matters: the capabilities your agent should expose and the problems it should solve.

When combined with Spin, agents can be packaged as lightweight WebAssembly components and deployed effortlessly.

Running these agents on Fermyon Wasm Functions results in an ultra-performant, globally distributed, and fully managed AI agent — powered by Akamai’s cloud backbone.

This gives developers the ability to run AI-powered applications closer to users, at edge scale, without the operational burden of managing infrastructure.

The result is a new class of intelligent, serverless workloads: easy to build, simple to deploy, and ready to run anywhere in the world. Want to give it a try yourself? Sign up for Fermyon Wasm Functions today.